Whenever we create a simple Node.js and Express application, it runs in a single main process without any cluster functionality. Generally the application works well, except when a particular incoming request keeps the process busy for a long time with synchronous, CPU-bound work. In such cases the main process stays occupied with that request, and any request that arrives for any other route in the meantime has to wait until the previous one completes. The code below illustrates this.

    const express = require('express');

    const port = 8000;
    const app = express();

    // Slow route: a long synchronous loop keeps the main process busy.
    app.get('/', (req, res) => {
      console.log(`Incoming req accepted by Process ${process.pid}`);
      // CPU-bound busy loop; tune the bound to your machine.
      for (let i = 0; i < 5e9; i++) {
      }
      res.send('hello world');
    });

    // Fast route: responds immediately when the process is free.
    app.get('/test', (req, res) => {
      console.log(`Incoming req accepted by Process ${process.pid}`);
      res.send('Quickly say Hello World');
    });

    app.listen(port, () => {
      console.log(`app is listening at port ${port} by Process ${process.pid}`);
    });

Here, we have created a simple Node.js Express application with two GET routes:

1) "/test": It just returns a message.

2) "/": It runs a very large loop and returns its message only after the loop finishes, which can take a long time.

Now, if we first open the route "/" in one browser, it starts running. If we trigger the "/test" route in another browser in the meantime, that request waits until the first one has completed. If we look in our terminal at the output of

    console.log(`Incoming req accepted by Process ${process.pid}`);

for both routes, we find that both requests were handled by the same process ID. That is why the second route had to wait while the first was running.
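The blocking effect can also be observed without Express or a browser. The following is a minimal sketch, not part of the application above: the helper names `blockFor` and `measureTimerDelay` are made up for illustration. It schedules a 0 ms timer, then keeps the main thread busy, and reports how late the timer actually fired.

```javascript
// Busy-wait on the main thread for roughly `ms` milliseconds
// (stands in for the large loop in the "/" route).
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // intentionally empty: synchronous CPU-bound work
  }
}

// Schedule a 0 ms timer, then block. Resolves with the number of
// milliseconds that passed before the timer callback could run.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    blockFor(blockMs);
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`0 ms timer fired after ${delay} ms`);
});
```

The timer cannot fire until the busy loop returns, so the reported delay is at least the blocking time. An incoming request to "/test" is stuck behind the loop in the same way.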

The above problem can be solved by using the Node.js cluster module. Here, we create as many child processes as there are CPU cores on the machine. Each of these child processes listens on the same port, as the code below shows:

    const cluster = require('cluster');
    const os = require('os');
    const process = require('process');
    const express = require('express');

    const port = 8000;
    const numCPUs = os.cpus().length;

    console.log(`No of cpu = ${numCPUs}`);

    // Create child processes from the main process based on the number of CPUs.
    // cluster.isPrimary requires Node.js 16+; older versions use cluster.isMaster.
    if (cluster.isPrimary) {
      console.log(`Primary ${process.pid} is running`);

      // Fork workers.
      for (let i = 0; i < numCPUs; i++) {
        cluster.fork();
      }

      // Replace any worker that exits.
      cluster.on('exit', (worker, code, signal) => {
        console.log(`worker ${worker.process.pid} died`);
        cluster.fork();
      });
    } else {
      // Each worker runs its own Express app on the shared port.
      const app = express();

      app.get('/', (req, res) => {
        console.log(`Incoming req accepted by Process ${process.pid}`);
        // CPU-bound busy loop; tune the bound to your machine.
        for (let i = 0; i < 5e9; i++) {
        }
        res.send('hello world');
      });

      app.get('/test', (req, res) => {
        console.log(`Incoming req accepted by Process ${process.pid}`);
        res.send('Quickly say Hello World');
      });

      app.listen(port, () => {
        console.log(`app is listening at port ${port} by Process ${process.pid}`);
      });
    }

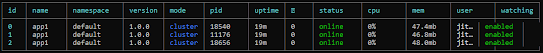

Here, os.cpus().length returns the number of logical CPU cores on the machine, and we create that many child processes by calling cluster.fork(). Each child process listens on port 8000. The cluster module lets the workers share this one port and spreads incoming connections across them.

Now, if we first request the route "/" and, before it completes, request the second route "/test" from another browser, the second request is handled by a different child process. It does not wait for the first route to finish and completes immediately.
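The same check can be scripted instead of using two browsers. This is a rough sketch using only Node's built-in `http` module. The helper names `timedGet` and `timeFastRouteWhileSlowRuns` and the 100 ms head start are assumptions for illustration.

```javascript
const http = require('http');

// GET a URL and resolve with the status code and elapsed milliseconds.
function timedGet(url) {
  return new Promise((resolve, reject) => {
    const started = Date.now();
    http
      .get(url, { agent: false }, (res) => {
        res.resume(); // discard the body, we only need the timing
        res.on('end', () => {
          resolve({ status: res.statusCode, ms: Date.now() - started });
        });
      })
      .on('error', reject);
  });
}

// Start the slow "/" request, wait briefly, then time "/test".
async function timeFastRouteWhileSlowRuns(baseUrl) {
  const slow = timedGet(`${baseUrl}/`);
  slow.catch(() => {}); // the slow request's outcome is not needed here
  await new Promise((resolve) => setTimeout(resolve, 100));
  return timedGet(`${baseUrl}/test`);
}
```

With the app running, calling `timeFastRouteWhileSlowRuns('http://localhost:8000').then(console.log)` against the single-process version should report a time close to the duration of the slow loop, while the clustered version should typically report a small number of milliseconds.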

If we perform load testing, we get much better results than with the first version. One important point to note, however, is that spawning a large number of child processes does not help: each child process consumes resources, and too many of them can adversely affect performance.
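One way to keep the number of workers in check is to cap it at the CPU count. The sketch below is only an illustration: `pickWorkerCount` and `availableCpus` are hypothetical helper names, and reading the requested count from a `WEB_CONCURRENCY` environment variable is an assumption, not something the cluster module does itself.

```javascript
const os = require('os');

// Prefer os.availableParallelism() where it exists (newer Node.js
// releases), otherwise fall back to the number of logical cores.
function availableCpus() {
  return typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length;
}

// Return a worker count between 1 and cpuCount.
// Missing or invalid input falls back to cpuCount.
function pickWorkerCount(requested, cpuCount = availableCpus()) {
  const n = Number.parseInt(requested, 10);
  if (!Number.isInteger(n) || n < 1) {
    return cpuCount;
  }
  return Math.min(n, cpuCount);
}

// WEB_CONCURRENCY is an assumed variable name for this sketch.
console.log(`workers to fork: ${pickWorkerCount(process.env.WEB_CONCURRENCY)}`);
```

The result could then replace `numCPUs` in the fork loop, so a request for more workers than cores is reduced to the number of cores.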

This is a very simple way to implement clustering in a Node.js application, but in a production environment it may not be so simple. To manage clustering automatically without writing this code by hand, we can use a process manager called PM2. I will discuss it in my next blog post.